I’ve recently written a library for doing declarative CAD in Haskell.

This is a wrapper to a 3D geometry library, called OpenCascade, which is written in C++.

This involved doing a fair amount of Haskell / C++ interop.

While I’m by no means the first person to integrate code in those two languages, it doesn’t seem to be discussed all that frequently.

I thought I’d write down some thoughts, decisions, what I thought worked well, and what I found challenging.

This isn’t a tutorial on how to do Haskell / C++ FFI; if you’re looking for that, I enjoyed reading this one by Luc Tielen.

I’ve never written this kind of blog post before[1], so it’s possible there’s no audience for it, but on the off chance there is, these are “my thoughts on Haskell / C++ interop”.

C++ vs C

An “Application Binary Interface”, or ABI, describes how data structures and function calls are laid out in compiled code. A stable ABI makes it possible for a program built with one toolchain to call into a library built with a different toolchain.

OpenCascade has a C++ interface.

C++ doesn’t provide a stable ABI, and it’s not really recommended to try to directly call C++ code from other languages.

The solution was to write a thin C API wrapping OpenCascade’s C++ API, using extern "C" to define C functions within C++, and to write a bunch of header files which are compatible with both languages.

Unlike C++, C does have a stable ABI, and extern "C" lets you write functions that follow it from within C++.

I wrote my C API in a flat folder structure, and adopted a convention of prefixing these functions with hs_ to guard against name collisions.

ForeignFunctionInterface vs CApiFFI

Haskell has two different mechanisms for FFI, each enabled by its own language extension: ForeignFunctionInterface and CApiFFI.

Both allow you to declare foreign functions. However, CApiFFI generates an intermediate C file containing a stub function that wraps the call to the underlying function.

ccall/ForeignFunctionInterface, by contrast, generates the call directly from the Haskell declaration and the platform’s calling convention.

As such, it makes sense that CApiFFI would have better support for macros, would probably fail more gracefully if the declaration is wrong, and might be more tolerant of the nuances of the platform’s calling convention.
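To make the difference concrete, here is a minimal sketch that imports the same libc function both ways. It uses cos from math.h and INT_MAX from limits.h purely as stand-ins so that it builds without OpenCascade; the Haskell names (cosViaCcall, cosViaCapi, cIntMax) are made up for illustration.

```haskell
{-# LANGUAGE CApiFFI #-}
{-# LANGUAGE ForeignFunctionInterface #-}

import Foreign.C.Types (CDouble (..), CInt (..))

-- ccall: GHC emits the call itself, trusting the Haskell type signature
-- and the platform calling convention. The header is not consulted.
foreign import ccall unsafe "math.h cos"
  cosViaCcall :: CDouble -> CDouble

-- capi: GHC generates a small C stub that includes math.h and calls cos,
-- so the C compiler sees the real prototype.
foreign import capi unsafe "math.h cos"
  cosViaCapi :: CDouble -> CDouble

-- capi can also import things that only exist at the C source level,
-- such as the INT_MAX macro.
foreign import capi "limits.h value INT_MAX"
  cIntMax :: CInt
```

The "value" import is the kind of thing ccall cannot express at all, since a macro has no symbol for the linker to find.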

The Haskell wiki recommends CApiFFI over ForeignFunctionInterface / ccall.

So I went directly to using CApiFFI rather than touching ccall.

Using CApiFFI feels a bit like an unnecessary layer of indirection, in that I’ve wrapped OpenCascade’s C++ API in my own set of C functions, only for CApiFFI to add its own additional layer.

Despite this, I’m still confident that CApiFFI was the right choice, because if I’m introducing myself to Haskell FFI, I want to use whatever the most “modern” approach is.

Data.Acquire vs ForeignPtr

Because most of the wrapped methods were doing allocations, I wanted some “Haskell Friendly” way of tracking, and eventually freeing, the C++ objects.

I went with Acquire from the resourcet library.

Acquire is based on a post by Gabriella Gonzalez.

There were alternatives to this.

I could have used the bracket pattern.

This would have come with the advantage of not adding a library dependency.

On the other hand, it would have been a bit tedious by comparison, and would actually have left me with even less control over when memory was freed than Acquire does.
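As a rough comparison of the two styles, the sketch below manages the same pair of allocations with bracket and with Acquire. It assumes the resourcet package is available, and it uses malloc/free on a CDouble as a stand-in for a wrapped C++ constructor and destructor; allocBox, box and the two sum functions are hypothetical names, not part of my library.

```haskell
import Control.Exception (bracket)
import Data.Acquire (Acquire, mkAcquire, with)
import Foreign.C.Types (CDouble)
import Foreign.Marshal.Alloc (free, malloc)
import Foreign.Ptr (Ptr)
import Foreign.Storable (peek, poke)

-- Stand-in for a C++ constructor wrapper: allocate and initialise a value.
allocBox :: CDouble -> IO (Ptr CDouble)
allocBox x = do
  p <- malloc
  poke p x
  pure p

-- bracket style: each object needs its own nested scope.
sumWithBracket :: CDouble -> CDouble -> IO CDouble
sumWithBracket a b =
  bracket (allocBox a) free $ \pa ->
    bracket (allocBox b) free $ \pb ->
      (+) <$> peek pa <*> peek pb

-- Acquire style: allocation and release are paired once, then composed.
box :: CDouble -> Acquire (Ptr CDouble)
box x = mkAcquire (allocBox x) free

sumWithAcquire :: CDouble -> CDouble -> IO CDouble
sumWithAcquire a b =
  with ((,) <$> box a <*> box b) $ \(pa, pb) ->
    (+) <$> peek pa <*> peek pb
```

With two objects the difference is small, but the nesting in the bracket version grows with every additional object, whereas Acquire values compose with the usual Applicative and Monad operations.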

Haskell also contains a “smart pointer” like mechanism for memory management called a ForeignPtr.

ForeignPtr differs from the plain Ptr type in that it associates each pointer with a finalizer, which is a routine that’s invoked once the garbage collector finds that there are no references left pointing to the ForeignPtr.

The finalizer could be used to call a C++ destructor.
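For reference, a minimal sketch of the ForeignPtr approach looks something like this. It is not what I did in the library; it uses malloc and the stock finalizerFree from base as a stand-in, where a real binding would pass a pointer to a C function that calls the C++ destructor. The names newCounter and readCounter are hypothetical.

```haskell
import Foreign.C.Types (CInt)
import Foreign.ForeignPtr (ForeignPtr, newForeignPtr, withForeignPtr)
import Foreign.Marshal.Alloc (finalizerFree, malloc)
import Foreign.Storable (peek, poke)

-- Allocate a value and attach a finalizer to it.
-- finalizerFree stands in for a pointer to a destructor wrapper.
newCounter :: CInt -> IO (ForeignPtr CInt)
newCounter n = do
  p <- malloc
  poke p n
  newForeignPtr finalizerFree p

-- withForeignPtr keeps the object alive for the duration of the action.
readCounter :: ForeignPtr CInt -> IO CInt
readCounter fp = withForeignPtr fp peek
```

There is no explicit free anywhere: the memory is released whenever the garbage collector gets around to running the finalizer, which is exactly the loss of control over timing that made me hesitate.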

I was reluctant to use this on my first Haskell C++ project, because I was worried it would mean pairing the potential challenges of debugging C++ deallocation problems, with the challenges of debugging GHC’s runtime system.

I only have the one regret about using the Acquire monad:

It’s now relatively complicated for me to write “pure” functions that use OpenCascade data structures.

In theory, there’s nothing “impure” about defining a cube, doing some transformations on it, and then calculating the location of the center of mass.

However, because I decided to wrap all my calls into OpenCascade in the IO monad, there’s no way for me to do this[2].

In general, I’m pretty happy about using the Acquire monad, and think this was one of my better decisions.

At the same time, I’d like to try using ForeignPtr at some point, to get a practical understanding of the tradeoffs.

Typing the FFI definitions

I went into the project not knowing a huge amount about OpenCascade’s API.

My thinking was that calling into C++ would be easier if anything that went over the FFI boundary was either a primitive type (int/double/bool), an enum (basically just an int), or a pointer.

I’m almost certainly doing a whole bunch more heap allocations than I strictly need to, especially because many of the OpenCascade types are small “3d vector” types, which could probably be safely passed around on the stack. Still, I think this basically paid off.

Haskell FFI comes with a mechanism to assign types based on C types. Because of my “only primitives and pointers go over the FFI boundary” rule, I didn’t touch this. Instead, I used empty data declarations to create phantom types, and used those to type the pointers.
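Here is a small sketch of the phantom type pattern. To keep it buildable without OpenCascade, it wraps libc’s opaque FILE type rather than a C++ class; CStream, c_tmpfile, c_fclose and openAndClose are names invented for this example.

```haskell
{-# LANGUAGE CApiFFI #-}

import Foreign.C.Types (CInt (..))
import Foreign.Ptr (Ptr, nullPtr)

-- An empty data declaration: it has no constructors and no values,
-- and exists only to distinguish Ptr CStream from other pointer types.
data CStream

foreign import capi "stdio.h tmpfile"
  c_tmpfile :: IO (Ptr CStream)

foreign import capi "stdio.h fclose"
  c_fclose :: Ptr CStream -> IO CInt

-- Open a temporary file and close it again.
-- Returns Nothing if the C library could not create the file.
openAndClose :: IO (Maybe CInt)
openAndClose = do
  f <- c_tmpfile
  if f == nullPtr
    then pure Nothing
    else Just <$> c_fclose f
```

Haskell never looks inside the pointed-to object, so the phantom type costs nothing at runtime, but the type checker will still reject passing a pointer to one kind of object where another is expected.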

Enums

Under the hood, C++ enums are basically just integers, so enums were the one exception to my “everything that goes over the FFI boundary has to be a primitive value, or a pointer” rule.

I wound up completely redefining every C++ enum as a Haskell enum (e.g. TopAbs.ShapeEnum), deriving instances for Show/Eq/Enum and using fromEnum and toEnum to convert between my types and the C++ enum.
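A minimal sketch of that conversion is below. The constructor list is illustrative and is meant to mirror the declaration order of the C++ TopAbs_ShapeEnum; treat the exact names and order as an assumption to check against the OpenCascade headers. The Bounded instance and the range check in shapeEnumFromC are additions for this example, so that an unexpected integer gives Nothing rather than a toEnum error.

```haskell
import Foreign.C.Types (CInt)

-- Constructor order must match the C++ enum's declaration order,
-- because the derived Enum instance numbers constructors from 0.
data ShapeEnum
  = Compound
  | CompSolid
  | Solid
  | Shell
  | Face
  | Wire
  | Edge
  | Vertex
  | Shape
  deriving (Show, Eq, Enum, Bounded)

-- Haskell value to the integer passed over the FFI boundary.
shapeEnumToC :: ShapeEnum -> CInt
shapeEnumToC = fromIntegral . fromEnum

-- Integer received from C back to a Haskell value, if it is in range.
shapeEnumFromC :: CInt -> Maybe ShapeEnum
shapeEnumFromC n
  | n >= shapeEnumToC minBound && n <= shapeEnumToC maxBound =
      Just (toEnum (fromIntegral n))
  | otherwise = Nothing
```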

I’m still wary that this may be a little fragile, as I’m dependent on the order of enum values in my code, and the OpenCascade code staying consistent over time.

I’ve got my fingers crossed that this won’t come back to bite me. Hopefully the OpenCascade developers don’t make any backwards incompatible changes to the enum ordering, but if they did, this could lead to a very thorny bug.

There are a bunch of mechanisms for auto generating Haskell code from a C definition, for instance c2hs.

I didn’t look into these, as I thought the relatively complex C++ in OpenCascade might stretch them, and my main concern isn’t writing out the function definition, it’s tolerance to change.

I’d have to call c2hs on every build, in case there were changes to OpenCascade, and I also didn’t want to add this complexity into the build pipeline.

C++ Compiler Flags

Using the default settings, a C compiler doesn’t treat an attempt to call an undeclared function as an error.

The upshot is that if you make a typo when referencing a C function in Haskell source code, it does produce an error, but only at the link stage, where it’s not particularly obvious which line or file contained the typo.

There’s a C compiler option, -Werror-implicit-function-declaration, which makes this fail more quickly.

However, it took me a while to figure out how to pass this argument to the C compiler where it’s needed, i.e. when compiling the stub functions generated by the capi ffi statements, as opposed to when compiling the bundled C++ code.

Adding it to the hpack cc-options or cxx-options fields seems like it should work, but these only affect the compiler used to build bundled C/C++ code, and don’t seem to affect the capi stubs.

For some reason, adding it to the cpp-options hpack field does actually result in the desired behaviour.

This confused me for ages, because this field should be used to configure the c-preprocessor, which can be used to preprocess haskell files.

And adding -Werror-implicit-function-declaration here resulted in a warning that “-Werror-implicit-function-declaration is not portable C-preprocessor flag” which Hackage treated as an error, briefly preventing me from uploading the project.

I’m pretty sure the fact that this “sort of worked” is a bug.

As far as I can tell, the “correct” way to pass this as a flag is to set the GHC option -optc -Werror-implicit-function-declaration.

-optc ⟨option⟩ is documented only as “Pass ⟨option⟩ to the C compiler”, and I can’t see anyone else talking about using it to enable this warning.

So while this seems like a totally reasonable thing to configure, I’m not 100% sure that I’m not still doing something weird.

While investigating this, I also discovered that if you use the hpack c-sources field (intended for C code) to include C++ files, rather than cxx-sources, while this works fine, it seems to prevent either the cc-options or the cxx-options settings from being applied.

Programming in C++ means memory safety issues, Haskell is (not) the cure

Programming in C or C++ comes with a certain set of challenges that are solved in more modern languages. One example of these is the risk of introducing dangerous memory safety bugs. Because C++ exposes direct[3] access to memory, it’s fairly easy to accidentally access invalid addresses.

One of the reasons for wanting to wrap a C++ project (like OpenCascade) in a higher level language is to avoid this category of bugs.

Also, being able to compile the C++ as part of the Haskell build process meant I didn’t have to mess around with C++ build tools.

With that said, while wrapping the API, I still ran into my fair share of “dangerous C++ bugs”.

Even with Data.Acquire providing an abstraction around memory management, it’s still possible to run into memory safety issues.

In theory, the “thick layer of Haskell” should mean that consumers of the high level API are isolated from these, but working at the boundary with the C++ means you’re still exposed to C++ levels of risk.

How (not) to structure a project

I decided to split the project up into a thin wrapper for OpenCascade, which would expose almost the same API as the C++ library, and a separate high level library with a more functional API.

This was partially a way to force myself to keep the functions at the FFI boundary as close to the C++ API as possible. But it also means that if anyone else wants to build a different Haskell library based on OpenCascade, they might be able to reuse my low level library, if they want.

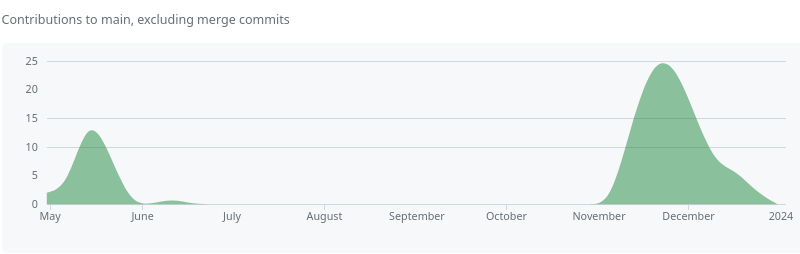

I 100% goofed though, because I set out trying to complete the low level wrapper before starting the high level API, which led to this happening:

The complete OpenCascade C++ library has an API that’s split over more than 8000 header files.

$ ls /usr/include/opencascade/ | wc -l

8276

I was never going to be able to extract all of these, and while I had vague plans to implement some superset of the modules used in the OpenCascade Bottle Tutorial, I didn’t really have a clear idea of how much of the API I really needed to wrap.

I eventually got fed up, and abandoned the project for six months.

When I finally picked it up again, I decided to change tack, and only implement the methods that I needed to build the high level library that I wanted.

I knew, even back when I was working on the core, that this wasn’t a good approach, and that I should have found a way to work more incrementally.

I wound up naming the high level library “Waterfall CAD”, partially as a play on “Waterfall Development”, parodying my approach of trying to write the whole low level wrapper without a plan for how I was going to use it.

The whole “extract a low level core first, in its entirety” approach might work for some projects, but probably not one based on such a large underlying library, and it certainly didn’t work for me.